WhyLabs Secure: Guardrails API

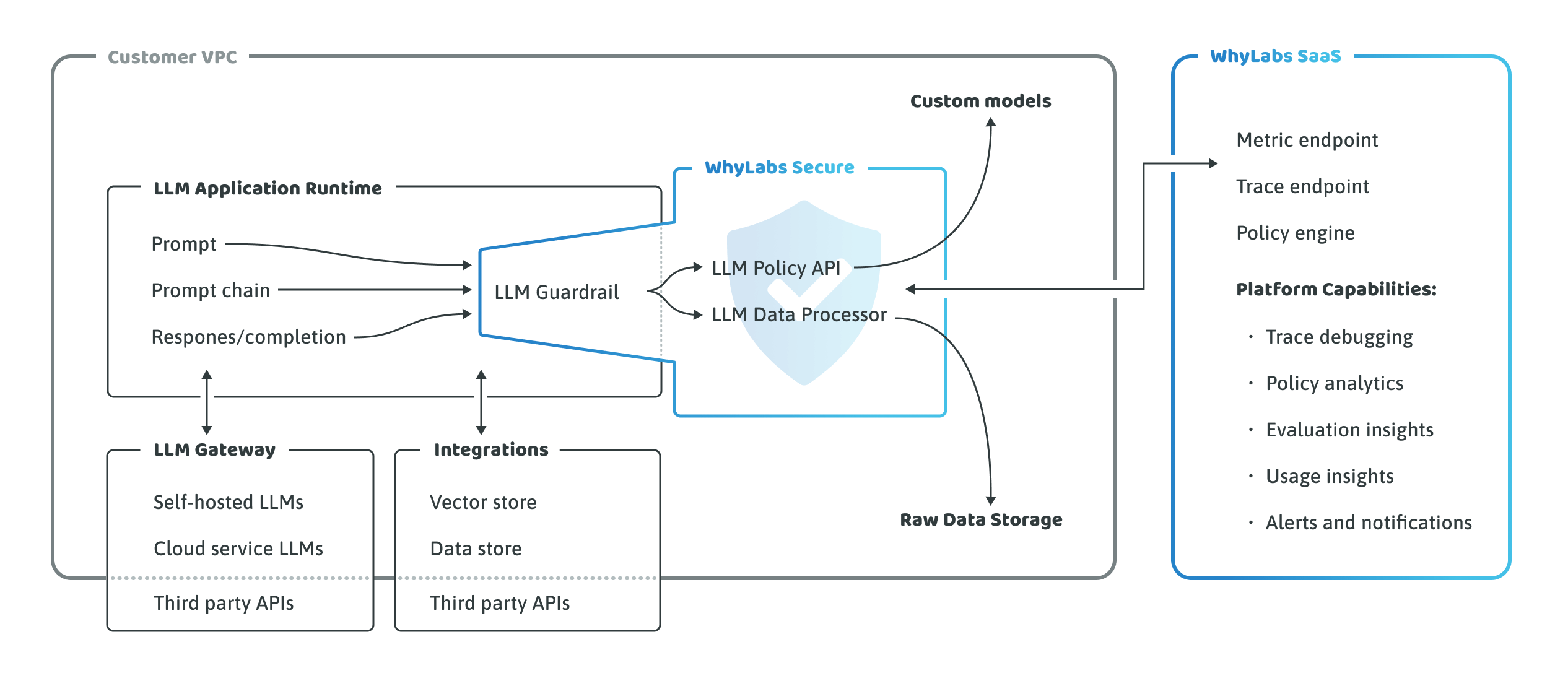

The Guardrails API, part of the WhyLabs Secure solution, is a RESTful API for interacting with the WhyLabs Guardrails service. It lets organizations implement safety measures and control mechanisms for their AI and ML systems, particularly Large Language Models (LLMs).

Overview

You can interact with the WhyLabs Guardrails API by sending HTTP requests to its endpoints from any programming language, so it can be integrated into existing systems and workflows without a dedicated client library.

A key feature of the Guardrails API is its deployment model: it is deployed inside the customer's Virtual Private Cloud (VPC), ensuring data privacy and security. The API connects with the WhyLabs API for two primary purposes:

- Pulling the latest policy definitions

- Pushing metrics and traces for monitoring and analysis

Architecture

The WhyLabs Secure architecture is designed to keep sensitive data inside the customer's environment while policies are managed centrally. Its key components are:

- Customer VPC: Where the Guardrails API is deployed. Sensitive LLM data is processed within the customer's secure environment.

- WhyLabs API: Central service for policy management and data collection

- Customer Applications: Integrate with the Guardrails API for real-time evaluation and logging
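
As a rough illustration of how a customer application might sit between an LLM and the Guardrails API, the sketch below wraps a generation call with an evaluation step. Everything here is hypothetical: `guarded_completion`, the `generate` and `evaluate` callables, and the idea that evaluation reduces to a single allow/deny boolean are assumptions made for the example, not part of the documented API.

```python
from typing import Callable


def guarded_completion(
    prompt: str,
    generate: Callable[[str], str],
    evaluate: Callable[[str, str], bool],
    fallback: str = "This response was withheld by policy.",
) -> str:
    """Return the LLM response only if the evaluation step allows it.

    `generate` stands in for the application's LLM call.
    `evaluate` stands in for a call to the Guardrails API running in the
    customer VPC; reducing its result to True/False is an assumption.
    """
    response = generate(prompt)
    if evaluate(prompt, response):
        return response
    return fallback
```

In a real deployment the `evaluate` callable would issue an HTTP request to the Guardrails API and interpret whatever result the deployed container returns.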

Available Endpoints

Working with the WhyLabs Guardrails API is as simple as making an HTTP request to any of the endpoints below:

1. /log/llm

- Purpose: Asynchronously evaluate and log a single prompt/response pair using LangKit.

- Use Case: Ideal for high-throughput scenarios where immediate response is not critical.

2. /evaluate

- Purpose: Evaluate and log a single prompt/response pair using LangKit.

- Use Case: For real-time evaluation of LLM outputs against defined policies.

3. /list_metrics

- Purpose: Get a list of available metrics that can be referenced in policies.

- Use Case: Useful for policy creation and management, allowing users to understand what metrics can be used in their guardrails.

4. /debug/evaluate

- Purpose: Evaluate and log a single prompt/response pair against a policy file.

- Use Case: Helpful for testing and debugging policy implementations before deploying them in production.

Request and response parameters for each endpoint are described in that endpoint's documentation.
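
The following is a minimal sketch of preparing a request for `/evaluate` or `/log/llm` with Python's standard library. The endpoint paths come from the list above; the JSON field names (`prompt`, `response`, `datasetId`), the `X-API-Key` header, and the `localhost:8000` address are assumptions for illustration, so check the endpoint documentation for your container version before relying on them.

```python
import json
import urllib.request

# Endpoint paths as listed in this section.
EVALUATE_PATH = "/evaluate"
LOG_LLM_PATH = "/log/llm"


def build_guardrails_request(base_url, path, prompt, response, dataset_id, api_key):
    """Build (but do not send) a POST request for one prompt/response pair.

    Assumed, not confirmed by this page: the JSON field names
    "prompt", "response", "datasetId" and the "X-API-Key" header.
    """
    body = json.dumps(
        {"prompt": prompt, "response": response, "datasetId": dataset_id}
    ).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + path,
        data=body,
        headers={"Content-Type": "application/json", "X-API-Key": api_key},
        method="POST",
    )


# Hypothetical local deployment and identifiers, for illustration only.
request = build_guardrails_request(
    "http://localhost:8000", EVALUATE_PATH,
    prompt="What is your refund policy?",
    response="Refunds are available within 30 days.",
    dataset_id="model-1",
    api_key="<your-api-key>",
)
print(request.get_method(), request.full_url)
# To send it: urllib.request.urlopen(request, timeout=10)
```

Passing `LOG_LLM_PATH` instead of `EVALUATE_PATH` targets the asynchronous logging endpoint with the same (assumed) payload.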

Metric Framework

The Guardrails API provides a rich set of metrics for evaluating and monitoring LLM interactions. You can query a list of metrics that a specific version of the container supports by using the /list_metrics API endpoint. This framework allows you to define custom metrics and policies tailored to your specific use case.

The latest version of the container supports a wide range of metrics, including:

- Prompt and response PII detection

- Sentiment analysis

- Toxicity scoring

- Similarity measures

- Text statistics (e.g., character count, token count, readability scores)

- Topic detection

- And many more...

For a full list of supported metrics, refer to the API documentation or use the /list_metrics endpoint specific to your deployment.
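
To make the metric listing easier to scan, the sketch below prepares a request for `/list_metrics` and groups metric names by their first dotted segment. The `X-API-Key` header, the use of GET, and the dotted naming style of the sample names (for example `prompt.pii`) are assumptions for illustration; the response format of `/list_metrics` is not specified on this page, so parsing it is left to the caller.

```python
import urllib.request
from collections import defaultdict


def build_list_metrics_request(base_url, api_key):
    """Build (but do not send) a request for /list_metrics.

    The GET method and "X-API-Key" header are assumptions.
    """
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/list_metrics",
        headers={"X-API-Key": api_key},
        method="GET",
    )


def group_metric_names(names):
    """Group dotted metric names by the text before the first dot."""
    groups = defaultdict(list)
    for name in names:
        prefix = name.split(".", 1)[0]
        groups[prefix].append(name)
    return {prefix: sorted(members) for prefix, members in groups.items()}


# Hypothetical metric names, used only to show the grouping.
sample = ["response.toxicity", "prompt.pii", "prompt.sentiment"]
print(group_metric_names(sample))
```

Grouping by prefix is just one convenient view when deciding which metrics to reference in a policy.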

Key Features

- Real-time Evaluation: Assess LLM outputs against predefined policies in real time.

- Asynchronous Logging: Option for high-throughput logging without impacting response times.

- Flexible Policy Management: Easily update and manage policies through the WhyLabs API.

- Comprehensive Metric Library: Access to a wide range of metrics for creating robust guardrails.

- Debug Mode: Test policies in a safe environment before production deployment.

- PII Detection: Identify and protect sensitive information in both prompts and responses.

- Customizable Metrics: Use built-in metrics or create custom ones to suit specific needs.

Conclusion

The WhyLabs Guardrails API, as part of the WhyLabs Secure solution, provides a powerful and flexible way to implement safety measures and control mechanisms for AI systems. With its comprehensive metric library, including advanced PII detection capabilities, it enables organizations to maintain high standards of quality, safety, and privacy in their AI-powered applications.

For more information on how to leverage the Guardrails API in your specific use case, please refer to the detailed documentation or contact the WhyLabs support team.