WhyLabs Optimize

The following is a high-level overview of the continuous improvement workflows and features included in the WhyLabs AI Control Center. These capabilities are grouped together under the "Optimize" moniker. Visit the other overview articles in this section to learn about the platform's observability and security capabilities.

Optimize

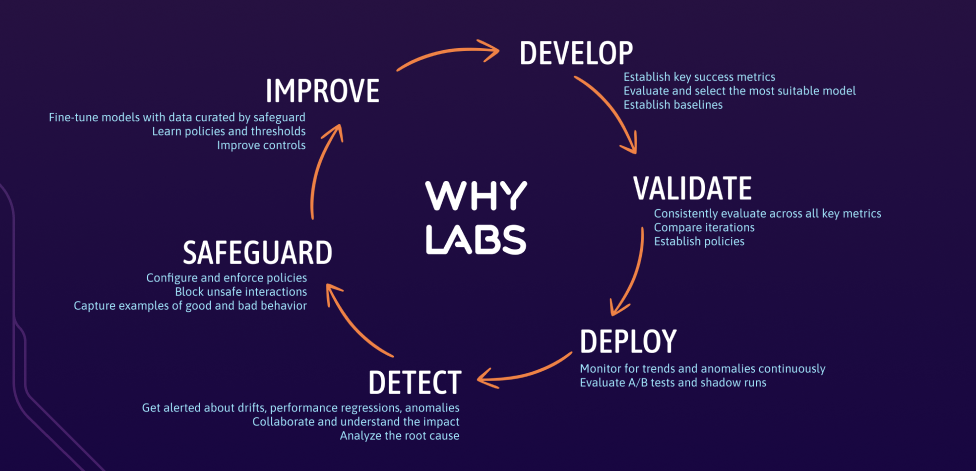

The hard work begins once the AI application is deployed to production. Operating an AI application means creating feedback loops that inform stakeholders (operations, security, and product teams) about the application's health and performance. The WhyLabs AI Control Center enables these feedback loops and helps automate a continuous optimization process.

Create feedback loops across the AI lifecycle

Monitoring for data drift, performance degradation, and potential security threats is crucial, but it is only half the battle. Operating AI responsibly means taking proactive steps to prevent these issues and continuously optimizing your AI application against these measures. To enable this, WhyLabs goes beyond simple observability to provide a comprehensive AI Control Center.

With the AI Control Center, you can both identify and mitigate issues. It supports a continuous feedback loop for ongoing optimization, helping keep your AI system performing at its best.

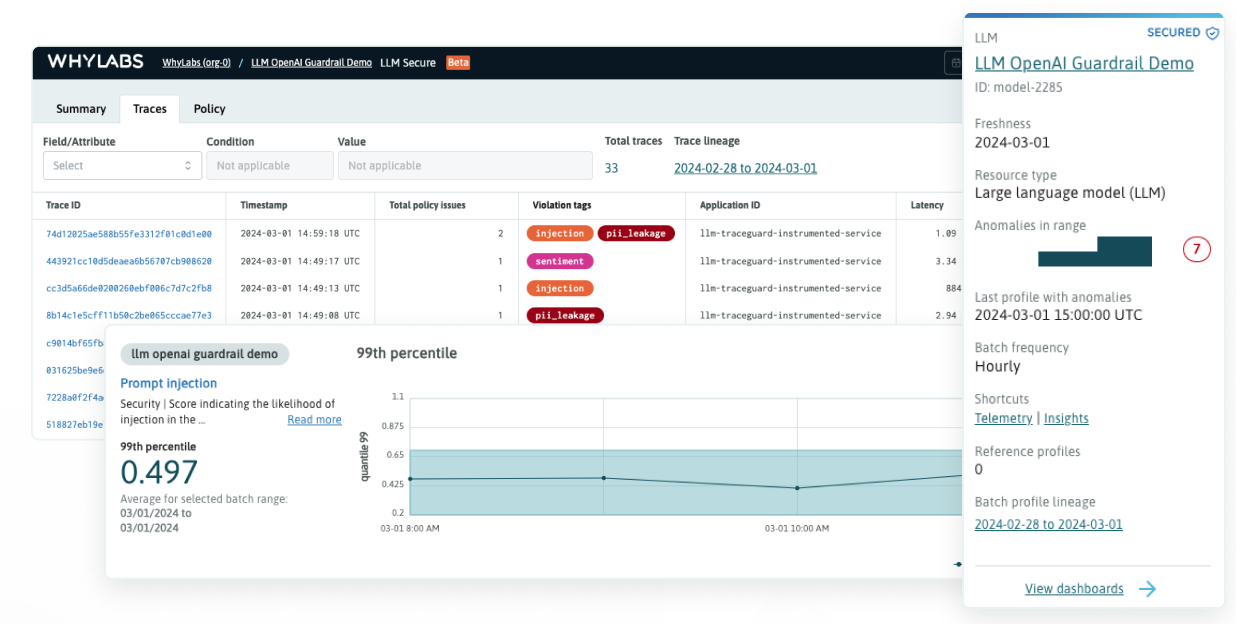

Take, for instance, the platform's LLM-specific features. The trace dashboard offers a holistic view of all LLM interactions, including prompts, responses, and RAG event data. These traces help you discover important insights about your AI application, which you can use to optimize the policy rulesets. The same insights let you tune the monitoring of batch data profiles effectively, supporting tailored model improvements over time.

The OpenLLMTelemetry library is integrated directly into the WhyLabs Secure container, which is deployed into the customer's VPC. It emits policy ruleset evaluations into the LLM traces, and these traces provide valuable insight into the LLM's performance that drives further optimization.

OpenLLMTelemetry is available on GitHub: https://github.com/whylabs/openllmtelemetry

Actionable Insights for Continuous Optimizations

With WhyLabs Optimize, you can build evaluation and red team datasets, tune policies continuously, choose an optimal model based on performance metrics, and automate your model updates.