Introduction

WhyLabs AI Control Center

WhyLabs is the leading observability and security company trusted by high-performing teams to control the behavior of AI applications.

With the AI Control Center you have tools to observe, monitor, secure, and optimize AI applications. Teams across healthcare, financial services, logistics, e-commerce, and others use the WhyLabs platform to:

- Monitor the performance of predictive ML and generative AI models

- Continuously track ML model performance using built-in or custom performance metrics, with support for delayed ground truth

- Monitor and observe data quality in ML model inputs, feature stores, and batch and streaming pipelines

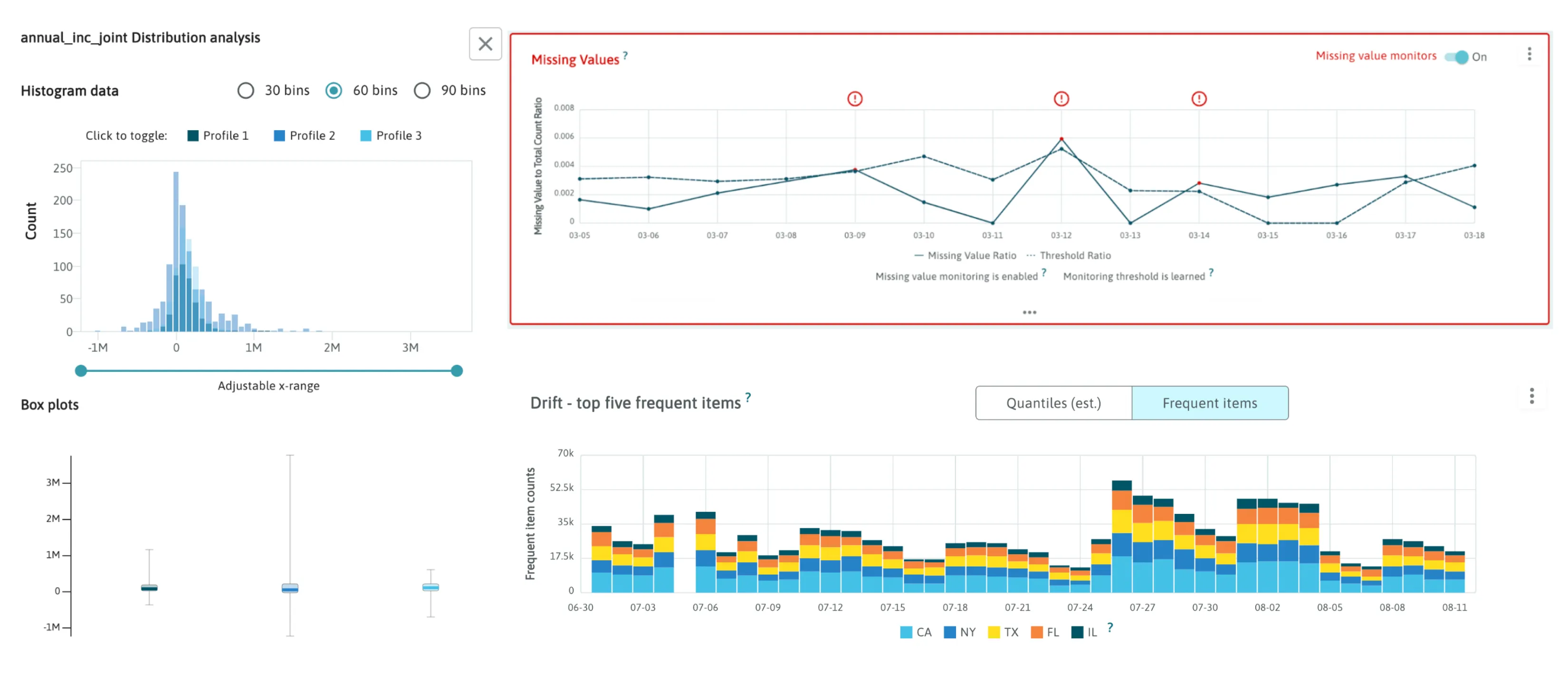

- Detect and find the root cause of common ML issues such as drift, data quality problems, model performance degradation, and model bias

- Guardrail LLMs with policy rulesets aligned to MITRE ATLAS and LLM OWASP standards, using a secure containerized agent and a proprietary policy manager

- Detect, alert, and control against common LLM issues including toxicity, prompt injections, PII leakage, malicious activity, and hallucinations

- Visualize and debug LLM interactions, including RAG events, using OpenLLMTelemetry

- Identify vulnerabilities and optimize performance via dedicated and customizable dashboards for LLM traces, security metrics, cost tracking, and model performance

- Improve AI applications through user feedback, monitoring, and cross-team collaboration

Without a holistic approach to controlling your AI application in production, risks go unmitigated and issues can cause irreversible damage to your business. Use the WhyLabs AI Control Center to operate your predictive ML and generative AI applications responsibly and securely.

WhyLabs Overview

If you enjoy learning about software in video format, check out our YouTube channel for workshops and tutorials. Here is one of our most popular workshop videos:

Monitoring your ML models and data pipelines with WhyLabs

WhyLabs is an AI Control Center that prevents data quality or model performance degradation by allowing you to monitor your data pipelines and machine learning models in production. If you deploy an ML model but don’t have visibility into its performance, you risk doing damage to your business due to model degradation resulting from things like data/concept drift, data corruption, schema changes and more. In many cases, data issues do not throw hard errors and can go undetected in data pipelines at a great cost to your business. With WhyLabs, you can prevent this performance degradation by monitoring your model/dataset with a platform that’s easy to use, privacy preserving, and cost efficient.

Three types of data problems

Data problems can be grouped into three categories.

The first category is data quality issues. Data pipelines handle ever larger volumes of data from a variety of sources and are becoming increasingly complex. Every step of a data pipeline represents a potential point of failure. These failures can manifest themselves as spikes in null values, a change in cardinality, or something else. Worse yet, these failures may be silent: no hard error is thrown, yet they still result in high costs to your business.
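As a rough illustration of the two symptoms named above, the following sketch computes a null fraction and a distinct-value count for one column and compares a new batch against a baseline. The function names (`column_summary`, `quality_issues`) and both thresholds are hypothetical choices made for this example; this is not the WhyLabs or whylogs API.

```python
from typing import Optional, Sequence


def column_summary(values: Sequence[Optional[str]]) -> dict:
    """Return the null fraction and distinct-value count for one column."""
    total = len(values)
    nulls = sum(1 for v in values if v is None)
    distinct = len({v for v in values if v is not None})
    return {
        "null_fraction": nulls / total if total else 0.0,
        "cardinality": distinct,
    }


def quality_issues(
    baseline: dict,
    current: dict,
    max_null_increase: float = 0.10,     # illustrative threshold (assumption)
    max_cardinality_ratio: float = 2.0,  # illustrative threshold (assumption)
) -> list:
    """Compare a current batch summary to a baseline summary."""
    issues = []
    # A jump in the share of missing values suggests an upstream failure.
    if current["null_fraction"] - baseline["null_fraction"] > max_null_increase:
        issues.append("null_spike")
    # A large change in the number of distinct values, in either direction.
    ratio = current["cardinality"] / max(baseline["cardinality"], 1)
    if ratio > max_cardinality_ratio or ratio < 1 / max_cardinality_ratio:
        issues.append("cardinality_change")
    return issues


baseline = column_summary(["US", "CA", "US", "MX", "CA", "US"])
current = column_summary(["US", None, None, "US", None, "US"])
print(quality_issues(baseline, current))  # ['null_spike', 'cardinality_change']
```

Neither batch raises an exception while being processed, which is the point: the failure only becomes visible when the summaries are compared.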

Even if you manage to keep your pipeline free of errors, machine learning models are still threatened by data drift. A fundamental assumption of machine learning is that your training data is representative of data a model will see in production. Data drift is a sudden or gradual change in your input data which violates this assumption. Consider an NLP model. Language is constantly evolving. New words are created, and existing words are used differently over time.
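One common way to quantify this kind of change is to bin a feature and compare the training and production distributions. The sketch below uses the Population Stability Index (PSI) as an example metric; it is one of several possible drift measures and is not presented here as the metric WhyLabs applies. The bin edges, sample values, and the `0.2` alert threshold are assumptions for illustration.

```python
import math
from typing import Sequence


def histogram(values: Sequence[float], edges: Sequence[float]) -> list:
    """Fraction of values in each bin [edges[i], edges[i+1]).

    The last bin also includes its right edge. Values outside the
    edges are ignored in this simplified sketch.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(counts)


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions."""
    score = 0.0
    for e, a in zip(expected, actual):
        # Clamp empty bins so the logarithm is defined.
        e, a = max(e, eps), max(a, eps)
        score += (a - e) * math.log(a / e)
    return score


edges = [0, 25, 50, 75, 100]
training = histogram([10, 20, 30, 40, 60, 70, 80, 90], edges)
production = histogram([60, 65, 70, 80, 85, 90, 95, 99], edges)

DRIFT_THRESHOLD = 0.2  # illustrative cutoff (assumption)
print(psi(training, training) > DRIFT_THRESHOLD)    # False
print(psi(training, production) > DRIFT_THRESHOLD)  # True
```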

Concept drift is another type of issue which is also out of our control. Concept drift refers to a change in the relationship between inputs and their labels. As an example, consider a spam detection algorithm. Spammers are clever and are constantly modifying their behavior to avoid detection. After some time, spam messages look very different from what they looked like at the time of model training.

How WhyLabs helps with data problems

WhyLabs prevents all three types of data problems by allowing you to monitor the data flowing through your data pipeline or ML model. WhyLabs takes a novel approach within the Data/ML monitoring space by separating the logging component of monitoring from the analysis and alerting component. This unique approach allows WhyLabs to be easy to use, privacy preserving, and highly scalable in a way that no other platform can be.

How WhyLabs helps with generative AI problems

Many problems can arise when LLMs are deployed to production. In addition to predictive ML models, WhyLabs helps teams run GenAI apps securely and responsibly.

LLMs can produce toxic or biased outputs, and they can be vulnerable to adversarial attacks. They can also produce responses that contain hallucinations and errors, even when leveraging RAG context. Unfortunately, these issues often go undetected, damaging customer trust and the organization's reputation. These failures are common and costly.

WhyLabs provides sophisticated capabilities to secure and optimize AI applications in production, helping to avoid costly failures. The WhyLabs policy manager provides a guardrail to block and prevent issues in real time, ensuring that any generative AI application can be controlled proactively. This chatbot failure is an example of what could have been prevented by using WhyLabs.

WhyLabs and whylogs

The WhyLabs Platform is custom built to ingest and monitor statistical summaries generated by the whylogs and LangKit open source libraries. These summaries are commonly referred to as "whylogs profiles", and they enable privacy-preserving observability and monitoring at scale. whylogs profiles have three properties that make them ideal for observability and monitoring use cases: they are efficient, customizable, and mergeable. whylogs works with all types of data including tabular, images, text, and embeddings.
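To make the "mergeable" property concrete, here is a deliberately simplified, standard-library-only sketch of a statistical summary that can be built on separate batches and then combined. The `ColumnSummary` class is hypothetical and is not the whylogs API; actual whylogs profiles track richer statistics than the handful of counters shown here.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class ColumnSummary:
    """Toy summary of one numeric column: only aggregates, no raw rows."""
    count: int = 0
    null_count: int = 0
    total: float = 0.0
    minimum: Optional[float] = None
    maximum: Optional[float] = None

    def track(self, values: Iterable[Optional[float]]) -> "ColumnSummary":
        """Update the summary in a single pass over the values."""
        for v in values:
            self.count += 1
            if v is None:
                self.null_count += 1
                continue
            self.total += v
            self.minimum = v if self.minimum is None else min(self.minimum, v)
            self.maximum = v if self.maximum is None else max(self.maximum, v)
        return self

    def merge(self, other: "ColumnSummary") -> "ColumnSummary":
        """Combine two summaries without access to the underlying data."""
        def pick(fn, a, b):
            if a is None:
                return b
            if b is None:
                return a
            return fn(a, b)

        return ColumnSummary(
            count=self.count + other.count,
            null_count=self.null_count + other.null_count,
            total=self.total + other.total,
            minimum=pick(min, self.minimum, other.minimum),
            maximum=pick(max, self.maximum, other.maximum),
        )

    @property
    def mean(self) -> Optional[float]:
        non_null = self.count - self.null_count
        return self.total / non_null if non_null else None


# Two batches summarized independently, for example on different machines.
morning = ColumnSummary().track([1.0, 2.0, None])
evening = ColumnSummary().track([3.0, 6.0])
merged = morning.merge(evening)

# Merging gives the same result as summarizing all the data at once.
whole_day = ColumnSummary().track([1.0, 2.0, None, 3.0, 6.0])
print(merged == whole_day)  # True
print(merged.mean)          # 3.0
```

Because only aggregates leave the environment where the data lives, a summary of this kind never exposes individual records, which is the intuition behind the privacy-preserving claim above.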

After you generate whylogs profiles, you can send them to the WhyLabs Platform, which has analytics and alerting capabilities that are key to monitoring your models and pipelines in production. With the WhyLabs platform, you can monitor as many models/pipelines as you need. You can prevent all 3 types of data problems by automatically comparing newly generated profiles to their historical baselines and getting alerted if new data diverges from old data.
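The idea of comparing a new profile to its historical baseline can be sketched with a simple rule: flag a metric when it falls more than `k` standard deviations from its trailing mean. The `diverges` helper, the sample values, and the default `k = 3.0` are assumptions for this example; they illustrate the comparison in general and do not describe how WhyLabs monitors are implemented or configured.

```python
from statistics import mean, stdev
from typing import Sequence


def diverges(history: Sequence[float], latest: float, k: float = 3.0) -> bool:
    """True when `latest` is more than k standard deviations from the trailing mean."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    center = mean(history)
    spread = stdev(history)
    if spread == 0:
        return latest != center
    return abs(latest - center) > k * spread


# Hypothetical metric taken from seven daily summaries of one column.
daily_null_fraction = [0.010, 0.012, 0.011, 0.009, 0.010, 0.011, 0.010]

print(diverges(daily_null_fraction, 0.011))  # False
print(diverges(daily_null_fraction, 0.150))  # True
```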

More information on whylogs profiles can be found in the whylogs Overview page.